How much data is required to power a lead-scoring model? How many attributes typically drive predictions that are reliable enough to be accurate and actionable?

Typically, the standard answers to these questions are “it depends” or “the more, the better.” And honestly, these are fair answers.

Unfortunately, they aren’t particularly helpful when you’re trying to determine whether or not to invest in lead scoring or trying to establish what your lead scoring model should look like.

The reality is that once you understand how lead scoring differs from purely predictive modeling in the sense of AI and ML, you can start wrapping your head around how to tackle it. And once you do that, it becomes apparent that you don’t need that much data; you just need the correct data.

The terms artificial intelligence (AI) and machine learning (ML) are often used interchangeably; in reality, they are related but distinct concepts. In short, machine learning is a subset of AI in which a system learns patterns from data instead of following hand-written rules.

And in this article, we will focus on the popular idea that AI and ML can take in first-party and third-party data, analyze it, and then offer predictions based on that data. We’re going to look at how this is possible with lead-scoring tools.

With lead scoring, several different types of predictive algorithms are applied to historical data sets in order to predict the likelihood of a lead becoming a customer.

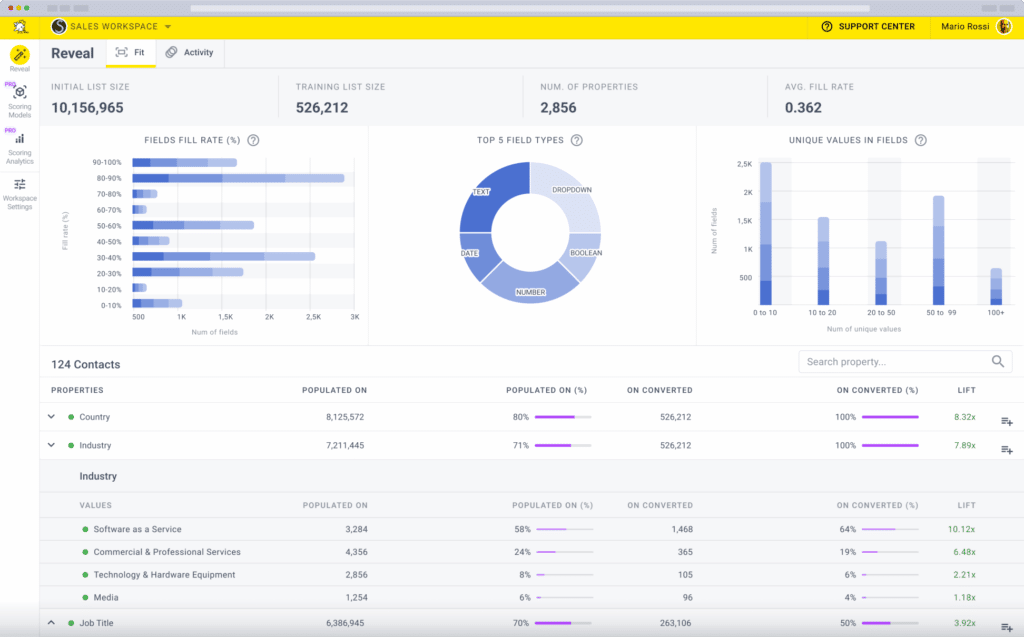

At Breadcrumbs, we’ve actually built a tool called Reveal that is at the core of our ML strategy. Reveal is designed to help you find your ideal customer profile (ICP) by comparing a particular audience segment of your choice to your overall list of contacts. It utilizes machine learning to reveal both “fit” and “activity” traits of different contacts or leads that can help indicate whether or not someone is a higher-intent lead.

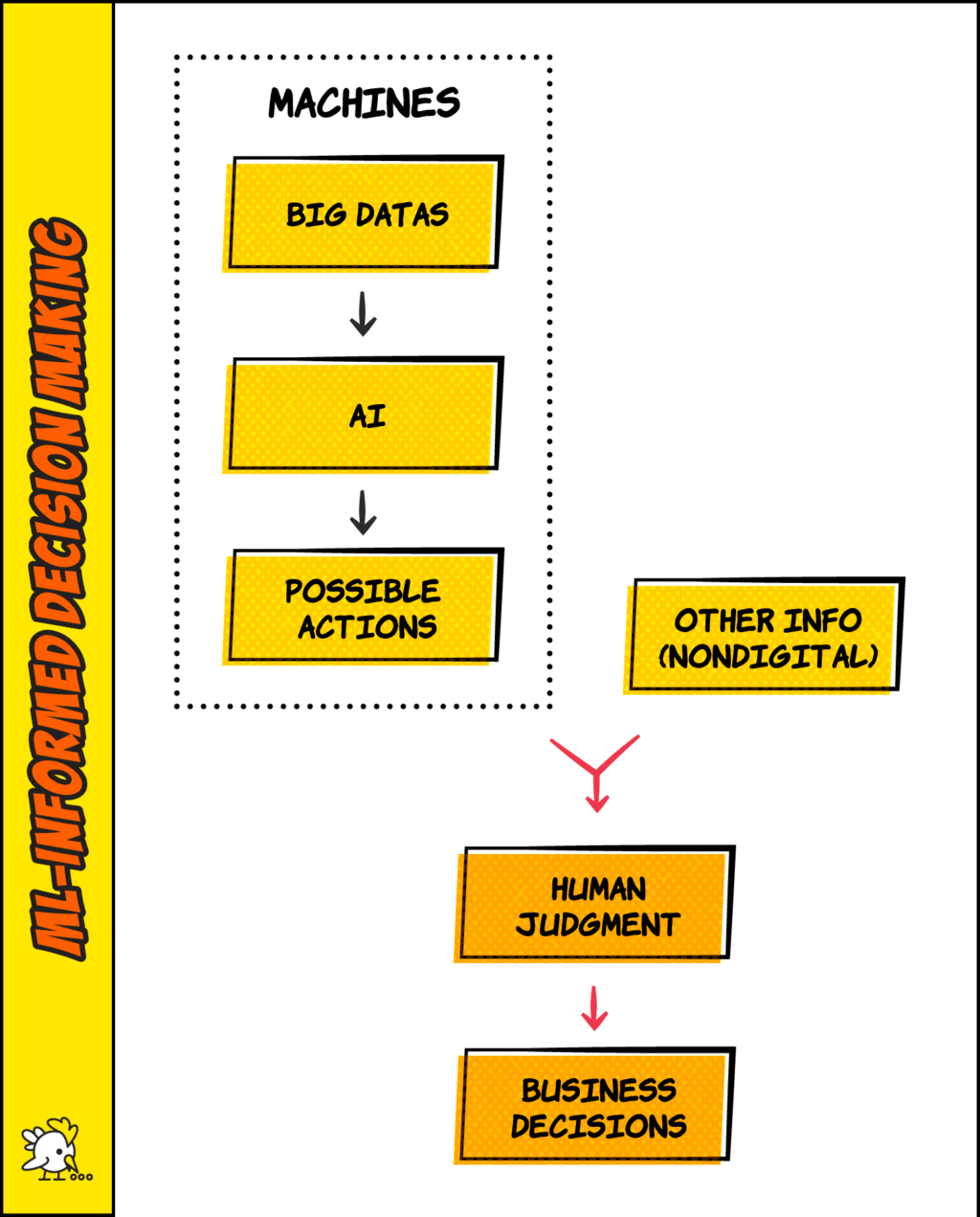

So, lead scoring can be powered entirely by AI or ML, or simply informed by it, but lead scoring is not necessarily either in and of itself. The logical follow-up question is then, “Why would you choose one approach or the other?”

The answer comes down to one simple explanation: Control.

You want to benefit from all that data and analysis, but you want to be able to interpret it, control it, and define your lead models for yourself.

There are two main reasons why maintaining control or the ability to intervene in your production lead-scoring model is essential:

Ultimately, the decision comes down to the amount of control and agility you desire. But you still don’t know how much data you need. Let’s dive into that next.

The answer to this question involves a few things that seem very unpopular now: Nuance and ambiguity.

I suggested earlier that the typical answer to the “how much data is enough?” question is either “it depends” or “the more, the better.” “It depends” is the better of these two seemingly unhelpful answers, in my humble opinion.

So what does it depend on? Well, it basically comes down to what you are trying to predict, your current data, and your philosophy around how best to leverage lead scoring. In terms of predictive modeling, there are two general rules of thumb regarding how much data is enough.

The 10X rule, or “the rule of 10,” operates on the principle that you need approximately 10x as many training samples as model parameters. In other words, you need a 10:1 ratio of training samples to model parameters.

This can create a stronger, well-performing model because it allows you to take in a wide variety of different data and gives your system a much more “well-rounded” look at the data, for lack of a better word, to account for inconsistencies or other factors impacting data performance.

Without enough training samples, essentially, you can’t trust that the model you develop will be accurate or reliable.
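As a rough illustration of the rule of 10, the check below simply compares a sample count to a parameter count. The function names and the default ratio argument are assumptions made for this sketch; they are not part of any particular lead-scoring tool.

```python
def min_training_samples(n_parameters: int, ratio: int = 10) -> int:
    """Return the smallest sample count that satisfies the rule of 10.

    `ratio` is the samples-to-parameters ratio (10:1 by default).
    """
    if n_parameters < 0 or ratio <= 0:
        raise ValueError("n_parameters must be >= 0 and ratio must be > 0")
    return ratio * n_parameters


def satisfies_rule_of_ten(n_samples: int, n_parameters: int, ratio: int = 10) -> bool:
    """Check whether the available samples meet the rule of thumb."""
    return n_samples >= min_training_samples(n_parameters, ratio)


if __name__ == "__main__":
    # Hypothetical model with 12 scoring attributes (parameters).
    print(min_training_samples(12))
    print(satisfies_rule_of_ten(n_samples=500, n_parameters=12))
```

With 12 parameters, the rule asks for at least 120 historical leads with a known outcome; it is a rule of thumb, not a guarantee of model quality.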

The Equal-Time Rule seeks to provide accurate models by accounting for things like changes in seasonality or outcomes based on a sales cycle, and it ensures that you’re looking at data sets equal to the period of time in question.

In simpler terms, here’s what this means: Seasonality is normal in businesses, and different factors can impact sales performance outcomes. So there may be an accounting software that runs a deal for enterprise-based businesses at the beginning of every new tax year; that’s a seasonal event that you’ll need to consider.

If you want to create accurate models, then you need to understand how things like seasonality are impacting your existing results.
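One simple way to read the Equal-Time Rule is as a coverage check: does your historical data span at least as many days as the period you care about (a full sales cycle, or a full year if you have annual seasonality)? The sketch below assumes that reading; the function names and the example dates are hypothetical.

```python
from datetime import date


def history_span_days(record_dates: list[date]) -> int:
    """Number of days between the oldest and newest historical record."""
    return (max(record_dates) - min(record_dates)).days


def covers_period(record_dates: list[date], period_days: int) -> bool:
    """True if the history spans at least `period_days` days."""
    if not record_dates:
        return False
    return history_span_days(record_dates) >= period_days


if __name__ == "__main__":
    # Hypothetical close dates of past deals.
    closed_deals = [date(2023, 1, 1), date(2023, 6, 30), date(2024, 1, 1)]
    # A yearly seasonal event needs at least a year of history.
    print(covers_period(closed_deals, period_days=365))
```

A check like this only tells you that the window is long enough; it does not tell you whether the seasonal event itself is well represented in the data.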

I know; we still haven’t really answered this question yet. We’re getting there!

It isn’t so much about “how much” data is needed as it is about relevant, accurate data and tracking the data points that matter.

You likely already have, or at least know, the right signals for your specific business. For example, you may know that how long a company has been in business doesn’t matter for your ideal customer profile, but that you work exclusively with businesses that have revenue over 100k annually. That’s a crucial data point.

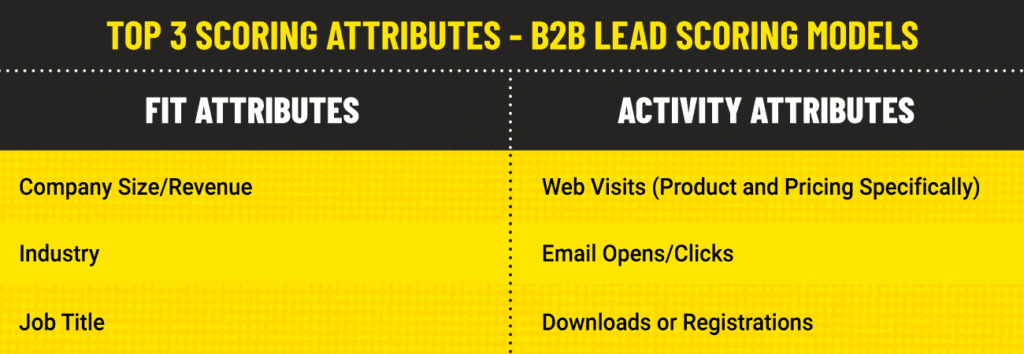

The trick is in fine-tuning the recency and frequency variables of your activity scoring model. From experience, the top three attributes across fit and activity for many B2B businesses are:

If you have a product-led model, several additional actions should make their way into your model, including hitting paywalls, admin actions like adding users and increased usage in general.

By no means is my pragmatic description exhaustive; again, there is additional nuance not covered here. But I can confidently say that, whether you use a traditional manual model or a fully ML-driven model, the scoring attributes listed above will be present more than 80% of the time and often drive 80% of the prediction.

So, this brings us to Breadcrumbs.

Breadcrumbs is a lead-scoring tool that utilizes machine-learning data to suggest different models (including lead scoring, upselling, and cross-selling) based on your past data sets. While we make suggestions, however, you’re in full control; you can split test different variables, including how recency and frequency impact a contact’s score, so you can find the model that works best for your specific brand, customer base, and sales cycle.

Breadcrumbs takes fit, activity, frequency, and recency into consideration, so every aspect of a lead’s qualities is taken into account when calculating their scores.
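To make the interplay of fit, activity, frequency, and recency more concrete, here is a minimal sketch of a hand-built scoring model. It is not how Breadcrumbs computes scores; the half-life decay, the weights, the action names, and the revenue rule are all assumptions chosen for illustration.

```python
def fit_score(lead: dict, rules: list) -> float:
    """Sum the points of every fit rule the lead satisfies.

    `rules` is a list of (predicate, points) pairs.
    """
    return sum(points for predicate, points in rules if predicate(lead))


def activity_score(events: list, weights: dict, half_life_days: float = 30.0) -> float:
    """Score activity from (action, days_ago) events.

    Frequency: every occurrence of an action adds its weight.
    Recency: each occurrence is halved for every `half_life_days` of age.
    """
    total = 0.0
    for action, days_ago in events:
        weight = weights.get(action, 0.0)  # unknown actions score zero
        total += weight * 0.5 ** (days_ago / half_life_days)
    return total


if __name__ == "__main__":
    # Hypothetical fit rule: annual revenue above 100k.
    rules = [(lambda lead: lead["annual_revenue"] > 100_000, 20)]
    # Hypothetical product-led actions and weights.
    weights = {"hit_paywall": 10, "added_user": 5}
    events = [("hit_paywall", 0), ("hit_paywall", 30), ("added_user", 60)]

    lead = {"annual_revenue": 250_000}
    print(fit_score(lead, rules), activity_score(events, weights))
```

Changing `half_life_days` or the weights is the kind of recency and frequency tuning you would want to split test against your own historical outcomes.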

Ultimately, larger, more robust data sets enable higher-accuracy predictive models.

However, this should not be an excuse to opt out, as the ideal approach involves continuous learning and optimization regardless of whether the approach is closer to a traditional manual lead scoring model, a fully ML/AI-driven approach, or a hybrid approach like the one enabled by Breadcrumbs.

Ready to generate high-performing lead-scoring models? Get started with Breadcrumbs for free!